Yesterday, Apple CEO Tim Cook published a letter to Apple customers, in response to an order given by the United States Government directing Apple to provide technical assistance to federal agents attempting to unlock the contents of an iPhone 5C that had been used by Rizwan Farook, who along with his wife, Tashfeen Malik, killed 14 people and wounded 22 others on December 2 in San Bernardino, California.

A United States Magistrate judge in Los Angeles has upheld the government order, clearing the way for an almost certain appeal by Apple to the 9th U.S. Circuit Court of Appeals, which is notoriously pro-privacy, and a possible final appeal to the United States Supreme Court.

Cook’s primary concern is not the technical assistance Apple might provide in decrypting information contained on that single phone (in fact, according to an Assistant United States Attorney, Apple has already complied with over 70 such requests since 2008), but the ensuing creation of legal and technical precedents which could require manufacturers to provide government agencies with encryption “back doors” in upcoming iOS releases. If upheld, the government’s order, which is argued using a rarely invoked 1789 congressional statute (the All Writs Act), could indeed provide legal precedent for the U.S. Government to require encryption back doors to be engineered into any product created by any manufacturer.

Reading Tim Cook’s letter immediately got me wondering: if the government prevails in its case, and these back doors become required, what would be the effect on the medical videoconferencing industry? As is so often the case, the devil is in the details.

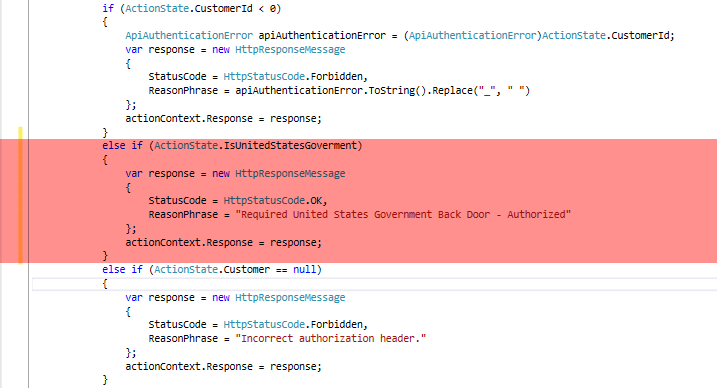

To be feasible for a given videoconferencing product, encryption back doors would require three technical and operational conditions:

1) The creation by the product manufacturer of a master key which, when used, would provide to the holder of the master key the session key for an encrypted video session; and,

2) The provision of that master key by the manufacturer to a government agency upon proper request; and,

3) The possession of the encrypted data stream by the government agency.

The first condition is not terribly difficult to meet. Private session keys are required for every encrypted session, and thus the provision of those keys based on an authenticated master key is, at most, an implementation detail.
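To make the first condition concrete, here is a minimal sketch of how a session key could be escrowed under a manufacturer’s master key. Everything in it is my own assumption for illustration: the function names `escrow_session_key` and `recover_session_key` are hypothetical, and the HMAC-based wrapping is a toy construction built from the Python standard library, not a vetted key-wrap algorithm and not any vendor’s actual design.

```python
import hashlib
import hmac
import secrets

KEY_LEN = 32  # bytes; equals the SHA-256 digest size used for the pad below


def _pad(master_key: bytes, nonce: bytes) -> bytes:
    """Derive a one-time 32-byte pad from the master key and a fresh nonce."""
    return hmac.new(master_key, b"wrap" + nonce, hashlib.sha256).digest()


def _tag(master_key: bytes, nonce: bytes, wrapped: bytes) -> bytes:
    """Authenticate the escrow record so a wrong key or tampering is detected."""
    return hmac.new(master_key, b"auth" + nonce + wrapped, hashlib.sha256).digest()


def escrow_session_key(master_key: bytes, session_key: bytes) -> dict:
    """Hypothetical escrow step: wrap one session key under the master key.

    Toy construction for illustration only (XOR with an HMAC-derived pad).
    """
    if len(session_key) != KEY_LEN:
        raise ValueError("session key must be 32 bytes")
    nonce = secrets.token_bytes(16)  # fresh per session, so pads never repeat
    wrapped = bytes(a ^ b for a, b in zip(session_key, _pad(master_key, nonce)))
    return {"nonce": nonce, "wrapped": wrapped, "tag": _tag(master_key, nonce, wrapped)}


def recover_session_key(master_key: bytes, record: dict) -> bytes:
    """What a master key holder would do to obtain the session key."""
    expected = _tag(master_key, record["nonce"], record["wrapped"])
    if not hmac.compare_digest(expected, record["tag"]):
        raise ValueError("wrong master key or tampered escrow record")
    pad = _pad(master_key, record["nonce"])
    return bytes(a ^ b for a, b in zip(record["wrapped"], pad))


if __name__ == "__main__":
    master_key = secrets.token_bytes(KEY_LEN)   # held by the manufacturer
    session_key = secrets.token_bytes(KEY_LEN)  # generated for one video call
    record = escrow_session_key(master_key, session_key)
    assert recover_session_key(master_key, record) == session_key
```

The point of the sketch is how little machinery is involved: the session key already exists, and escrowing it takes only a few extra lines. It also shows why the master key is such a sensitive asset, since whoever holds it can unwrap every escrow record created under it.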

The second condition would require, one hopes, some detailed legal prophylaxis to ensure that the master key is used only in certain clearly defined, and relatively rare, circumstances. Of greater concern, however, would be the safeguarding of the master key. If a single criminal, foreign agent, or employee of the manufacturer or the government gained access to the master key for a device, the security of that device would be compromised until the master key could be changed. If the unauthorized access were obtained without the manufacturer becoming aware of the breach, the security of that device would be compromised indefinitely.

The third condition requires that the data stream be accessible by the manufacturer, or the customer to whom the manufacturer has sold or leased the product. Of the three conditions, this is the trickiest for the government, because access to the encrypted data stream will differ for each product, deployment model, and customer. Gaining access to a video stream from an MCU hosted in the cloud by Manufacturer A is as simple as gaining network access to the Manufacturer A data center, which Manufacturer A would presumably be required to grant. However, gaining access to a peer-to-peer stream being transmitted directly from one computer to another would require foreknowledge of the internet routes to be taken by the video packets, which ranges from unlikely to impossible depending on the specifics of the connection.

If the government prevails in its case, it is possible that every large videoconferencing vendor using a Multipoint Control Unit (MCU) would be required to construct a back door, thus eliminating the possibility of absolute privacy in MCU-based videoconferencing systems, except those hosted in data centers to which the United States Government cannot demand access, or those manufactured by smaller vendors to whom the government has not applied the requirement (due to oversight, undue burden, or lack of volume). The vast majority of videoconferencing providers use some kind of MCU technology, and so it is not difficult to imagine these vendors eventually offering offshore cloud-based MCUs, or customers with on-premises deployments deciding to host part of the infrastructure offshore. Cloud customers would have the option of paying less for optimal performance where video streams would be subject to government capture and decryption, or paying more for sub-optimal performance where video streams would not be subject to government capture and decryption.

Customers of peer-to-peer systems such as SecureVideo/VSee, on the other hand, would not be affected by MCU access, because there is no MCU in a peer-to-peer system. While the government could require the provision of a master key, the government in most cases would not be able to capture the encrypted packets, and could therefore not gain access to the encrypted video streams.

As to the likely market reactions, your guess is as good as mine. It is possible that most videoconferencing customers won’t care about possible government decryption of their video streams, but medical videoconferencing customers will care deeply, because a master key breach could put massive percentages of protected health information into unauthorized hands. If this happens, I would expect many of them to explore peer-to-peer technologies such as ours. At the very least, depending on what happens with Apple’s appeals process, this is a very important development for medical privacy professionals to keep an eye on, with respect to both videoconferencing and other affected technologies such as mobile devices, full disk encryption, cloud storage, secure web transactions, and whatever else you can think of that has encryption as a security underpinning.